DWM and mixed refresh rate performance

Are mixed refresh rates still an issue? Let's find out!

Preface:

Let's head back to May 27, 2020.

Microsoft released a new feature update (build) they called "2004" (codename 20H1).

Along with "DirectX 12 Ultimate", this build introduced some changes to how DWM (Desktop Window Manager) operates.

This was a change many had been asking for: DWM would now sync its composition updates to the fastest monitor's refresh rate, instead of the slowest one's.

Some people claimed it was a great success, whereas others claimed there were still a lot of issues.

Dissenting Opinions

When this update dropped, I was quite curious why some people found their issues solved, whereas others swore that running mixed refresh rates was still a big problem.

Being a curious boi with two monitors capable of different refresh rates, I did some basic testing around that time, but never anything in-depth or data-driven. Mostly it was running hardware-accelerated apps on both monitors and messing with the refresh rates.

My good old Zowie monitor was capable of up to 144Hz, and out of the box also let me select 120, 100 and 60Hz. It was paired with a Dell U2415 (60Hz only).

In my basic testing, 144Hz FELT like it performed a lot worse than 120Hz. Friends in a similar situation didn't seem to share my experience. Maybe it was all in my head, but I ended up keeping my monitor at 120Hz.

I saw several others echoing my findings: running at a multiple of the slowest monitor's refresh rate had positive effects. To me this made a lot of intuitive sense, since updating the slower monitor on every other cycle (in sync) seems a lot simpler and more optimal than updating it at intervals of roughly 2.4 cycles (144 / 60 = 2.4).
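The timing intuition above can be sketched in a few lines of Python. This is only arithmetic on refresh intervals, not a model of how DWM actually schedules composition, and the helper names (`row`, `coincide_every_ms`) are made up for illustration. Each column is 1/720 s (720 being the least common multiple of 60, 120 and 144), and every refresh is marked with "U".

```python
from functools import reduce
from math import gcd

# Hypothetical helper names; this only looks at when refreshes land in time,
# it does not model how DWM actually schedules composition.

def lcm(a: int, b: int) -> int:
    return a * b // gcd(a, b)

def row(rate_hz: int, cols_per_second: int, width: int) -> str:
    """Mark each refresh with 'U' and the time in between with '-'."""
    step = cols_per_second // rate_hz  # columns between two refreshes
    return "".join("U" if col % step == 0 else "-" for col in range(width + 1))

def coincide_every_ms(a_hz: int, b_hz: int) -> float:
    """Time between refreshes that happen at the same instant on both monitors."""
    return 1000 / gcd(a_hz, b_hz)

rates = [60, 120, 144]
cols_per_second = reduce(lcm, rates)  # 720, so one column is 1/720 s (~1.39 ms)
width = cols_per_second // 12         # show a 1/12 s (~83.3 ms) window

for fast_hz in (120, 144):
    print(f"{60:>3}Hz  {row(60, cols_per_second, width)}")
    print(f"{fast_hz:>3}Hz  {row(fast_hz, cols_per_second, width)}")
    shared = gcd(60, fast_hz)
    print(f"       in sync every {coincide_every_ms(60, fast_hz):.1f} ms "
          f"(every {fast_hz // shared} refreshes at {fast_hz}Hz)\n")
```

With 60Hz + 120Hz, every second 120Hz refresh lands exactly on a 60Hz refresh (every 16.7 ms). With 60Hz + 144Hz, the two only line up every 12 refreshes at 144Hz (about 83.3 ms), and the refreshes in between drift relative to each other.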

(Diagram: update ticks "U" for 60Hz vs 120Hz, and for 60Hz vs 144Hz.)

Bothering to actually test...

Recently I got into a minor discussion about this, and I essentially had to admit that my experience was exactly that: a single person's experience plus some hearsay.

Feeling in the mood for a few short tests, I fired up one of my favorite test cases, "Serious Sam Fusion 2017".

It has a built-in benchmark mode, which is quite flexible and adjustable, and it supports multiple graphics APIs (DX11, DX12 and Vulkan).

It also writes benchmark results to a log file, including highs, lows, averages, etc.
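Since the summaries are plain text, pulling the numbers out for comparison is easy to script. A minimal sketch, assuming each run's summary looks like the excerpts quoted in this post (a "Duration:" line followed by "Average:" and "Low 1%:" lines). The log file's name and location aren't covered here, so the text is passed in as a string, and `split_runs`/`parse_run` are hypothetical helpers, not part of the game.

```python
import re
from statistics import mean

# Hypothetical helpers. The patterns only cover the summary lines quoted in
# this post; any other lines in the log are simply ignored.
AVG_RE = re.compile(r"Average:\s*([\d.]+)\s*FPS")
LOW_RE = re.compile(r"Low 1%:.*?average\s*([\d.]+)\s*ms\s*\(([\d.]+)\s*FPS\)")

def split_runs(log_text: str) -> list[str]:
    """Split the log into one chunk per run; each summary starts with 'Duration:'."""
    chunks = re.split(r"(?=Duration:)", log_text)
    return [c for c in chunks if c.strip()]

def parse_run(chunk: str) -> dict[str, float]:
    """Extract average FPS and the 1% low frame time/FPS from one run summary."""
    avg = AVG_RE.search(chunk)
    low = LOW_RE.search(chunk)
    if avg is None or low is None:
        raise ValueError("chunk does not look like a benchmark summary")
    return {
        "avg_fps": float(avg.group(1)),
        "low1_ms": float(low.group(1)),
        "low1_fps": float(low.group(2)),
    }

# Trimmed copies of the first two DX12 run summaries from this post.
sample = """Duration: 60.0 seconds (8094 frames)
Average: 134.9 FPS (136.0 mid 98% in 7932 frames)
Low 1%: min 14.4 ms, average 15.6 ms (64.2 FPS) for 81 frames
Duration: 60.0 seconds (8121 frames)
Average: 135.4 FPS (136.4 mid 98% in 7957 frames)
Low 1%: min 14.2 ms, average 14.9 ms (67.2 FPS) for 82 frames
"""

runs = [parse_run(chunk) for chunk in split_runs(sample)]
print(f"mean average FPS: {mean(r['avg_fps'] for r in runs):.2f}")
```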

Test setup

Software:

OBS Studio

Serious Sam Fusion 2017 (DX11 and DX12)

GPU-Z

Task Manager

Hardware:

i7-8700

GTX 1070

32 GB of crappy RAM

I ran a few tests at 144Hz + 60Hz, as well as at 120Hz + 60Hz, in both DX11 and DX12 modes.

I set up OBS with the most basic scene possible (a single game capture) at 1080p60, NV12, 709, partial range. OBS was open on the 60Hz monitor, recording with NVENC at stock settings.

I let the game auto-pick its own settings, except for the graphics API.

Vertical sync was off, and framerate was uncapped.

Duration: 60.0 seconds (8094 frames)

Average: 134.9 FPS (136.0 mid 98% in 7932 frames)

Low 1%: min 14.4 ms, average 15.6 ms (64.2 FPS) for 81 frames

High 1%: max 5.4 ms, average 5.0 ms (200.2 FPS) for 81 frames

Sections: AI=5%, physics=9%, sound=0%, scene=38%, shadows=37%, misc=11%

<90 FPS: 7%

<120 FPS: 9%

Duration: 60.0 seconds (8121 frames)

Average: 135.4 FPS (136.4 mid 98% in 7957 frames)

Low 1%: min 14.2 ms, average 14.9 ms (67.2 FPS) for 82 frames

High 1%: max 5.5 ms, average 5.1 ms (195.3 FPS) for 82 frames

Sections: AI=5%, physics=9%, sound=0%, scene=38%, shadows=37%, misc=11%

<90 FPS: 6%

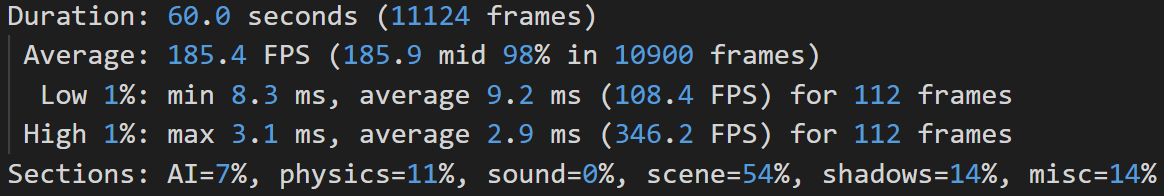

<120 FPS: 8%Duration: 60.0 seconds (11124 frames)

Average: 185.4 FPS (185.9 mid 98% in 10900 frames)

Low 1%: min 8.3 ms, average 9.2 ms (108.4 FPS) for 112 frames

High 1%: max 3.1 ms, average 2.9 ms (346.2 FPS) for 112 frames

Sections: AI=7%, physics=11%, sound=0%, scene=54%, shadows=14%, misc=14%

<120 FPS: 1%

Duration: 60.0 seconds (11071 frames)

Average: 184.5 FPS (185.0 mid 98% in 10849 frames)

Low 1%: min 8.6 ms, average 9.3 ms (107.3 FPS) for 111 frames

High 1%: max 3.1 ms, average 2.8 ms (351.6 FPS) for 111 frames

Sections: AI=7%, physics=11%, sound=0%, scene=53%, shadows=15%, misc=14%

<120 FPS: 2%

It was quite bizarre to see how much of a difference this made. It almost seemed like the frame rate was stuck hovering around 144 FPS, but it did in fact go quite a lot higher much of the time.

GPU-Z indicated that GPU load was not maxed out, yet the PerfCap Reason was still VRel (which is what it usually shows when my GPU is maxed out).

So if we do some comparisons:

Average:

184.95 / 135.15 * 100 ≈ 136.8% (mean of the two 120Hz runs vs. mean of the two 144Hz runs)

Roughly 37% more frames per second on average at 120Hz.

Low 1%:

15.25 / 9.25 * 100 ≈ 165% (mean 1% low frame time at 144Hz vs. at 120Hz)

The 1% low frame times (in milliseconds) were about 65% higher, i.e. worse, at 144Hz.
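The same arithmetic as a quick Python sketch, using the numbers from the DX12 logs above. The dictionary keys are just labels for this sketch. Note the direction of each comparison: higher is better for FPS, lower is better for frame times.

```python
from statistics import mean

# Numbers copied from the DX12 logs above (two runs per configuration).
avg_fps = {"144+60": [134.9, 135.4], "120+60": [185.4, 184.5]}
low1_ms = {"144+60": [15.6, 14.9], "120+60": [9.2, 9.3]}

def pct_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return (new / old - 1) * 100

# Higher is better for FPS, so compare 120Hz against 144Hz.
avg_gain = pct_change(mean(avg_fps["120+60"]), mean(avg_fps["144+60"]))
# Lower is better for frame times, so compare 144Hz against 120Hz.
low_penalty = pct_change(mean(low1_ms["144+60"]), mean(low1_ms["120+60"]))

print(f"average FPS, 120Hz vs 144Hz: {avg_gain:+.1f}%")
print(f"1% low frame time, 144Hz vs 120Hz: {low_penalty:+.1f}%")
```

This prints +36.8% and +64.9%, in line with the rounded figures above.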

And then there is DX11...

Everything remained the same, except for the graphics API, of course.

Duration: 60.0 seconds (11657 frames)

Average: 194.3 FPS (194.8 mid 98% in 11423 frames)

Low 1%: min 7.8 ms, average 8.8 ms (114.1 FPS) for 117 frames

High 1%: max 3.0 ms, average 2.8 ms (356.2 FPS) for 117 frames

Sections: AI=8%, physics=12%, sound=0%, scene=54%, shadows=11%, misc=16%

Duration: 60.0 seconds (11422 frames)

Average: 190.4 FPS (190.8 mid 98% in 11192 frames)

Low 1%: min 8.0 ms, average 8.9 ms (112.5 FPS) for 115 frames

High 1%: max 3.1 ms, average 2.9 ms (349.2 FPS) for 115 frames

Sections: AI=7%, physics=12%, sound=0%, scene=53%, shadows=11%, misc=16%

Duration: 60.0 seconds (11755 frames)

Average: 195.9 FPS (196.5 mid 98% in 11519 frames)

Low 1%: min 8.0 ms, average 8.8 ms (113.0 FPS) for 118 frames

High 1%: max 3.0 ms, average 2.7 ms (364.0 FPS) for 118 frames

Sections: AI=7%, physics=12%, sound=0%, scene=52%, shadows=12%, misc=16%

Duration: 60.0 seconds (11595 frames)

Average: 193.3 FPS (193.9 mid 98% in 11363 frames)

Low 1%: min 7.9 ms, average 9.1 ms (110.2 FPS) for 116 frames

High 1%: max 3.0 ms, average 2.8 ms (352.9 FPS) for 116 frames

Sections: AI=7%, physics=12%, sound=0%, scene=53%, shadows=11%, misc=16%

After the initial DX12 results, I fully expected the DX11 benchmarks to go the same way, but here the refresh rate does not seem to matter very much, at least as far as the numbers are concerned.
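One way to put "does not seem to matter very much" into numbers, without assuming which DX11 runs were at which refresh rate, is to compare the best and worst average FPS across all four runs of each API. A rough sketch using the averages from the logs above:

```python
# Average FPS of the four runs per API, copied from the logs above in the order listed.
avg_fps_by_api = {
    "DX12": [134.9, 135.4, 185.4, 184.5],
    "DX11": [194.3, 190.4, 195.9, 193.3],
}

def spread_pct(values: list[float]) -> float:
    """How much higher the best run's average FPS is than the worst run's, in percent."""
    return (max(values) / min(values) - 1) * 100

for api, fps in avg_fps_by_api.items():
    print(f"{api}: best run {spread_pct(fps):.1f}% above worst run")
```

This prints 37.4% for DX12, which is essentially the 144Hz vs 120Hz gap, and 2.9% for DX11, so all four DX11 runs land within about 3% of each other whichever way they split between the two refresh rates.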

Maybe there is some latency/feel/magic that goes missing when running non-multiples, but I'd rather not speculate or make any claims about that for the time being.

Thinking back on my original experience, it did in fact happen to be a DX12 title, though I didn't consider that relevant at the time. This could also explain why some people reported no issues, even when running non-multiple refresh rates.

Dragging my feet

At this point it's been several years, and the topic isn't all that current anymore. Still, I wanted a little write-up with some actual data and tests that can hopefully demonstrate there is something to the whole "multiples of the lowest refresh rate" saying.

Thank you for your time <3